SQL Server Implicit Conversion – A Silent Perf Killer

I recently had the opportunity to work with a developer at the day job. He was using a loop (for what he was doing, it makes sense 🙂 ), and he had a query that ran great when he stripped the question out of the process, but when running in the loop, it took 120ms and read 5079 pages of data. This running repeatedly in a loop had a considerable cost to the total process time of this batch process. He was confused; it was the same query. What was happening??

I asked if the queries were the same, and he said yes; the only difference was that he was supplying the variable value instead of using the variable…

AHA! The smell of implicit conversion started to fill the air. He showed me the procedure, and it declared the variable as NVARCHAR. The column in the table was a VARCHAR. Change of his SQL script from the system to display the variable as NVARCHAR just like the loop alone showed the IO_Statistics that matched the earlier profiler results: more reads.

Why?

Implicit Conversion!

When comparing data across data types, SQL Server may have to perform an implicit conversion depending on precedence constraints. So? For most of these implicit conversions (if not all), you have the potential to turn your query into a non-index-friendly one. A properly formed where clause that can use an index is sometimes referred to as a SARG or Search Argument. Various no-no’s can prevent a query from being a SARG. Favorite things like using a function to the left of the where clause and often forgotten things like mismatching your data types. SQL’s query optimizer has to convert the data, and the index becomes useless because the data is not indexed for every possible data type it could be converted to.

Let’s watch this implicit conversion happen… First, create a table:

USE tempdbGO CREATE TABLE TestTable(col1 INT IDENTITY(1,1),col2 VARCHAR(10))GO

And populate that table with some random data

DECLARE @Ascii INTDECLARE @Text VARCHAR(10) DECLARE @i INTSET @i = 1WHILE @i < 100000 BEGIN SET @Text = '' WHILE len(@Text) < 10 BEGIN SET @Ascii = ROUND(120*RAND(),0) IF @Ascii BETWEEN 97 AND 122 SET @Text = @Text + CHAR(@Ascii) END INSERT INTO TestTable (col2) VALUES (@Text) SET @i= @i+1END

Then we can create some indexes (or makes, your choice, I say indexes…)

CREATE CLUSTERED INDEX col1_identityON TestTable (Col1); CREATE NONCLUSTERED INDEX col2ON TestTable (col2);

Now the fun begins. We have a table with random character data stored in a VARCHAR column. The queries below should show us the risk of not matching our data types between columns and variables and even between columns that should be related in joins.

First Up – Let’s query that using an NVARCHAR variable… IO and Time Statistics will be enabled to show us the difference

SET STATISTICS IO ONGOSET STATISTICS TIME ONGO DECLARE @variable NVARCHAR(10)SET @variable = 'aikoqmuiby' SELECT * FROM TestTableWHERE Col2 = @Variable;

When I ran that query, I saw it did 301 reads and the elapsed time was 103ms. Is that horrible? You may not think so, but hopefully, you took my advice when I gave when I talked of Empirical Evidence, and you enjoy testing your code and thinking through options and possibilities.

Remember, this is a small table (100k rows and each row is relatively narrow). So you have to ask yourself the question in terms of what it is doing… It did 300 reads and took 101 ms to bring back one row on my machine (with the random nature, you may get 0 or more rows, but with such a small sample for the random data, you will probably get none, you’ll see close to the same reads and most likely duration, however). So is that acceptable to me? Not really. What if the table grows? I added an index to that column. I would expect to see an index seek. The non-clustered index includes the clustered key from Col1, a two-column table, so the non-clustered index on Col2 handles the query…

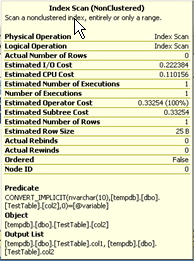

But when I look at the query plan, I see that it isn’t using an index seek but an index scan. If I hover over that scan, I see something that looks a bit odd:

I am searching for the predicate CONVERT_IMPLICIT(nvarchar(10), Col2,0)=@variable. I never typed CONVERT anywhere, hence the “IMPLICIT.” The column is stored as a VARCHAR; my variable is an NVARCHAR. Yes, those deal with variable character lengths string data, but they are not the same. A look at SQL’s data type precedence helps show you when and where/why implicit conversions happen. Because of the differences between these two data types, they can’t be compared against the other unless converted. As most developers seem to know by now, a function on the left side of the equals signs in a where clause can’t use an index. We didn’t put one here, but the conversion did for us… This is implicit conversion…

So how does the alternative look? The alternative is to use the right data type. Let’s correct a shot:

SET STATISTICS IO ONGOSET STATISTICS TIME ONGO DECLARE @variable VARCHAR(10)SET @variable = 'aikoqmuiby' SELECT * FROM TestTableWHERE Col2 = @Variable;

Voila! Three reads now, an index seek in the query plan and according to the time less than 1ms (0 ms elapsed). So simply paying attention to the data type means a 100x improvement in time and reads each. Implicit conversion kills; you don’t necessarily know why you are being killed.

The moral should be clear: Pay attention to your data types or suffer from Implicit Conversion.

While I have your attention regarding data types, remember that NVARCHAR takes as much space as VARCHAR. If you need the features of NVARCHAR, if you need to support UNICODE data (or have a strong chance of doing so… Being an “International” company is not a strong chance, how do you store your data? How is your data used? Ask those types of questions). Choosing NVARCHAR and never using it can cost you in terms of table size, index size, and backup size. This all means additional storage space required (Money and Physical space) and that one call to the disks brings back less information (Performance consideration).

1 thought on “SQL Server Implicit Conversion: You Could Be Suffering Right Now!”