Most businesses are rotten with Excel sheets and CSV exports from various tools and as my mentor puts it “Excel is the world’s database”. The dbatools command Import-DbaCsv enables the quick load of CSV tables into SQL Server, which then opens up the world of fast transformation and use in other tools such as PowerBI, or even just having it queryable rather than just in a file somewhere.

Import-DbaCsv is for you if any part of your job is to manually manage CSV files and you want to reduce the amount of time that you take to do that processing. Even if you are not a DBA but are interested in the data field, you can use SQL Server express for free, import and transform data using PowerShell for free, and export that data into Power BI or any other tool for free. I promise it can make your manual processes better, faster, and more resilient and save you tons of time!

In this blog post, we will audit the dbatools command Import-DbaCsv. I will test, review, and evaluate the script based on a series of identical steps. Our goal is to provide insights, warnings, and recommendations to help you use this script effectively and safely. Import-DbaCsv is a powerful tool to automate the importing of CSV data, and it works well in scheduled solutions such as daily ETL or ELT processes.

Scope

Dbatools is a community project full of bright minds. This audit series is more an informational series on useful capabilities and considerations rather than rigorous testing. This is to inspire even greater use of PowerShell and by extension dbatools as I believe it will assist in automating many tasks as a DBA.

1. Script Overview

Script Name: Import-DbaCsv

Author: Chrissy LeMaire

Description: Efficiently imports very large (and small) CSV files into SQL Server.

2. Testing Environment Setup

I created a multi-subnet lab with three developer edition SQL Server instances, one SQL Express and a network share based on this blog post by Jonathan Kehayias.

Download and Install

You are probably familiar with dbatools at this point, but if not now is the perfect time to familiarize yourself with the install and use of the module. On your test environment you can open PowerShell and run the following scripts. These will install Dbatools and add it to the path for future projects as well as import it into the current session for the purpose of testing the scripts of this blog post.

Install-Module Dbatools Import-Module Dbatools

Initial Setup

To save both me and you time, I will skip the test environment steps since they are identical here to my first post. Instead, I will share with you that this script, along with invoke-dbaquery and a few other community install tools, have been the backbone of the internal automation I have developed over the past couple of years here at Straight Path. I will write a post soon on “Building a custom SQL Server monitoring solution” which is based on a session I will be presenting at SQL Saturday South Florida with the same name, both of these are based again on the internal SQL Server monitoring process I have made to support our clients.

All this to say, I believe this tool has a lot of potential to save you time, improve your processes and services to both internal and external customers, and take something that used to be tedious and time consuming and instead make it a powerful part of your toolbox. It also works well as an entry to data engineering and pipelines as this is a very basic ETL\ELT tool.

3. Execution

Now that your environment is set up and I have thoroughly convinced you that this is one of the best commands to learn, we can get into the scenarios. For each of the scenarios I have downloaded a series of CSV formatted datasets from data.gov, which is a great place to get real, non-confidential and interesting data to work with as you learn SQL, Power BI, or data engineering proof of concepts like this post is doing.

Note that you would need to uncomment the sqlcredential argument if you needed to use a different credential than your current account. You can uncomment it by deleting # in the script, and the explanation of how to populate it is in my first post as well.

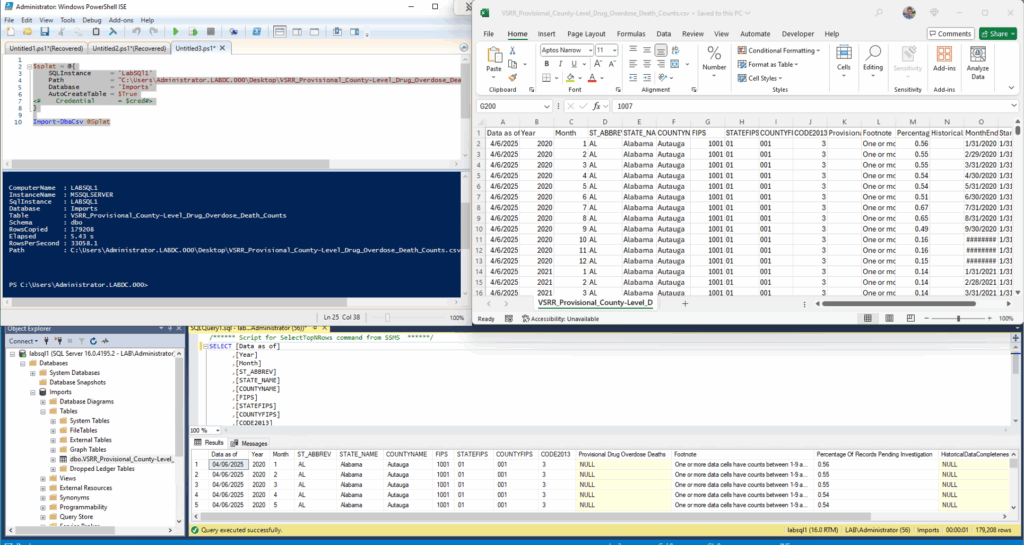

Scenario #1: Load the Entire CSV Into an Auto-generated Table

This is the straightforward discovery option. It takes the entire CSV and imports it into an auto-generated table with extremely wide data types. This is not ideal for large datasets or for long term use. Staging tables or checking how the process imports the CSV and the resulting data in the table is the ideal. Splatting is back again for readability.

$splat = @{

SQLInstance = 'LabSQl1'

Path = "C:\Users\Administrator.LABDC.000\Desktop\VSRR_Provisional_County-Level_Drug_Overdose_Death_Counts.csv"

Database = 'Imports'

AutoCreateTable = $True

<# Credential = $cred#>

}

Import-DbaCsv @Splat

Not much required here, other than the target instance, the path to the CSV File, the target database, and the autocreatetable argument. I am adding a bonus script below for this scenario to point this command at all of the CSV files in a directory with the same setup, just adding a foreach loop and replacing the path value with the path of each found file.

$folder = "C:\Users\Administrator.LABDC.000\Desktop\Multiple files"

$files = Get-ChildItem $folder

$files | Foreach-Object {

$splat = @{

SQLInstance = 'LabSQl1'

Path = $_.FullName

Database = 'Imports'

AutoCreateTable = $True

<# Credential = $cred#>

}

Import-DbaCsv @Splat

}

Results

This process ran quickly but it does use data types that aren’t great for long term performance. However, using the auto-generated table as a staging table or for discovery will prove to be an easy and fast process in addition to your daily tools, or as the root of an automated process to load data, run some processing steps on it before sending it down the line or using it for some report.

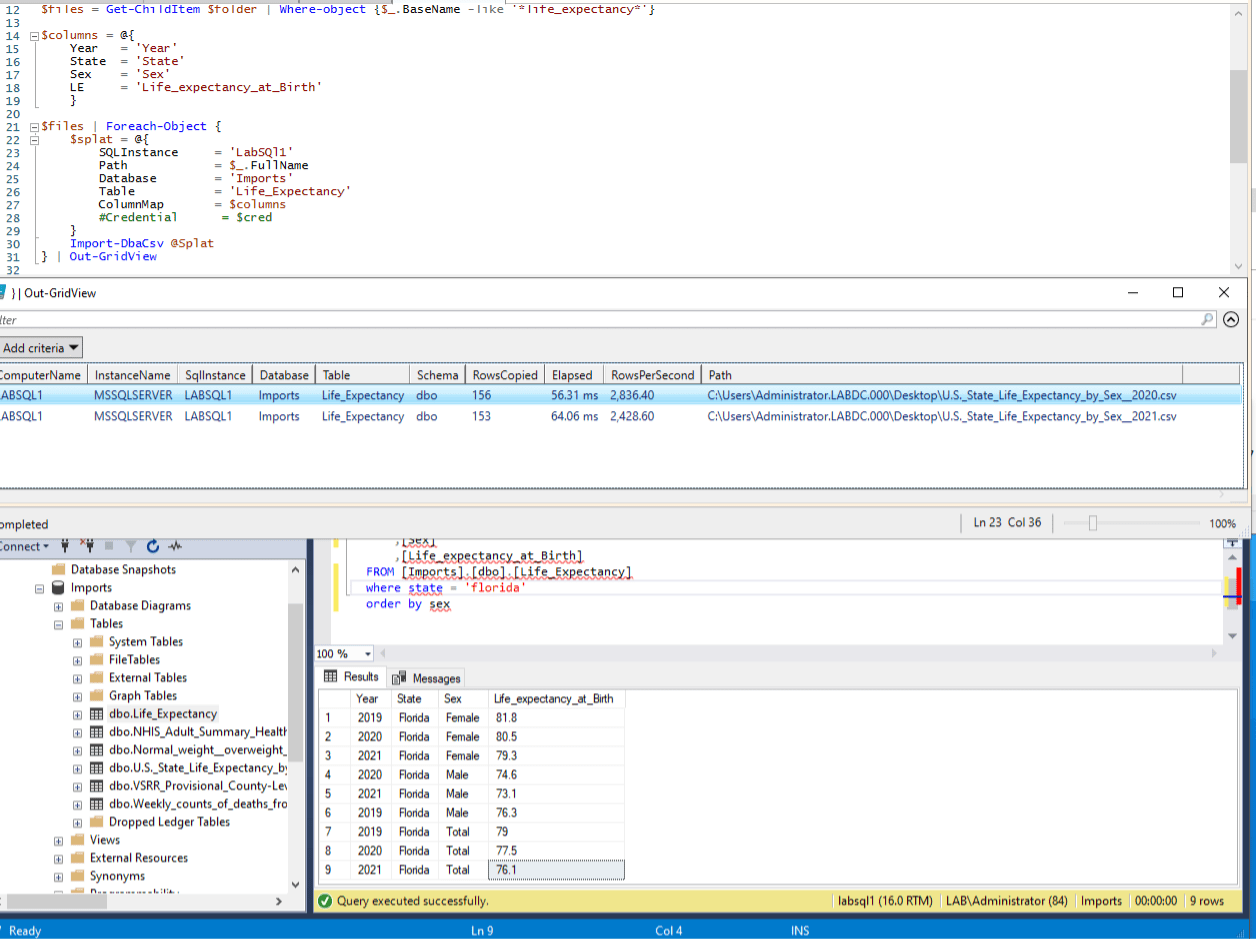

Scenario 2: Load Data Into an Existing Table

Most situations would likely move towards cutting down time and using production tables. This scenario uses a couple more of the capabilities including column mapping to reduce and transform the data before loading. This data set I have has a similar structure between years. Although, I had to add the year column to the CSV for it to work as there isn’t a built in method for adding a column with a specific value as part of the import. I would typically add the year column after loading the csv into a staging table in SQL; However, this mimicks asking for a change on the source data which isn’t impossible and I think this is a good scenario to show off for data sets that will have similar structure and will act like a feedtape due to their timestamp.

$folder = "C:\Users\Administrator.LABDC.000\Desktop"

$files = Get-ChildItem $folder | Where-object {$_.BaseName -like '*life_expectancy*'}

$columns = @{

Year = 'Year'

State = 'State'

Sex = 'Sex'

LE = 'Life_expectancy_at_Birth'

}

$files | Foreach-Object {

$splat = @{

SQLInstance = 'LabSQl1'

Path = $_.FullName

Database = 'Imports'

Table = 'Life_Expectancy'

ColumnMap = $columns

#Credential = $cred

}

Import-DbaCsv @Splat

} | Out-GridView

A few more moving pieces here. Now we have a couple of lines retrieving all the relevant files from a directory followed by a column map to use in our command. We also reused the loop from the end of the last scenario. As you can imagine, combining this as part of a process opens up a lot of possibilities. Importing data into SQL server quickly opens up the capabilities to transform values and use that data in future processes. I did not demonstrate the speed in either of my scenarios, however Chrissy’s post delves into performance and I know in my experience it is an extremely command.

Results

This is the next step for a more baked-in process. Taking columns from a known csv export and importing it into a known table will make for efficient processing, especially if there is a regular delivery of a specific CSV. I can’t emphasize enough that if you spend hours and hours on CSV processing, this command and learning a bit of T-SQL will save you so much time.

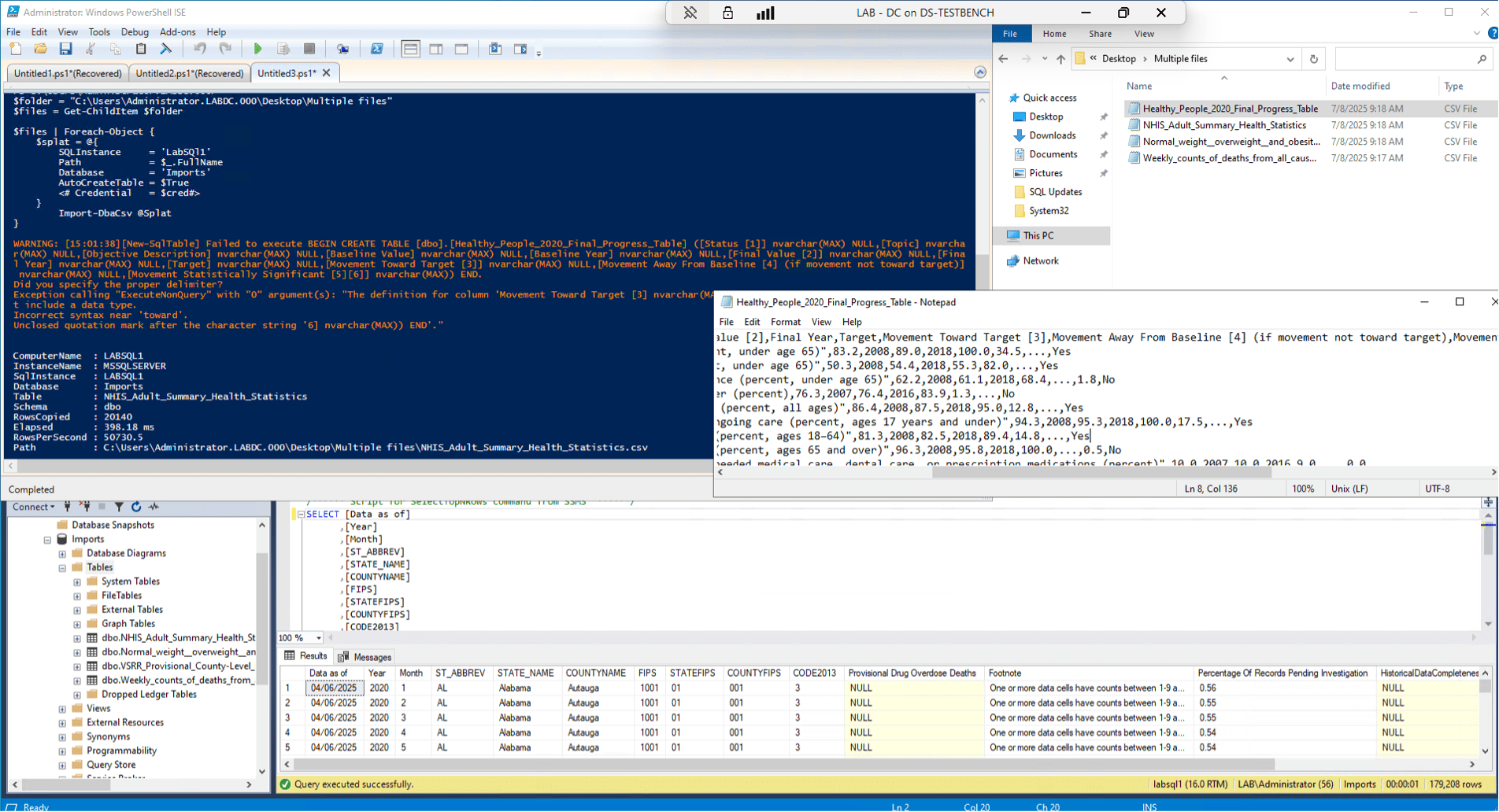

Bonus Scenario

This one had our first error, which pointed out that the file needed a simple fix in having simpler column names. The command runs all in one transaction and when it errors it rolls everything back to where it was before the transaction started. This means it is forgiving as you set this process up and learn what needs to be done or changed to enable automation. Otherwise, having a list of files to load into SQL for staging before processing them is a convenient tool over manual management in graphical excel.

Review and Analysis

There is a common theme with the commands I am choosing – that I believe them to be extremely useful to the DBA and that they should be added to your PowerShell DBA toolbox. Import-DbaCsv is another one of those specific commands that opens up the world of automation by simplifying internal processes. There are many necessary, and sometimes repetitive tasks that revolve around CSVs as exports from internal apps or custom CSVs created through homegrown processes. This command allows you to import CSVs into SQL Server allowing for faster and more comprehensive data transformation and manipulation tools than Excel would offer, as well as easier integration, alerting, and collaboration based on the data imported.

If any part of your work week involves managing or modifying CSVs, take a look at this command and brush up on your stored procedure writing skills to automate that process. If you need the final result to be a csv, you can do all of these automated transformations on the data and then using invoke-dbaquery to select on the table and output to a new CSV file. The possibilities for saving time from manual processing are nearly endless.

Warnings and Caveats

The warning here will spread across all of the commands we audit as well as anytime you use PowerShell. This script imports data to a new or existing table in a database or to a temp table. There is the possibility that you could point this script to a production table and load bad data into it which causes downstream problems. Take care that you test all automated processing steps so that this doesn’t happen.

Also, be careful to monitor the sizes and number of files you are importing and the available space on the instance. You want to avoid filling a drive as that would cause other SQL databases to be unavailable for workloads. As always, anything you build should be built in a test environment with unimportant data and the results should be reviewed before deploying to production.

Recommendations

This is a strong recommendation – if you manage CSVs in your daily or weekly processing this command can help you get it quickly into SQL Server and the automation possibilities from there are great. Stored procedures, inserting the data into different tables, running reports, and more. The scope of this command is very narrow in that it deals solely with importing data into a table from a CSV and the various tools to make the process successful, and it does it well. If you have regularly scheduled CSV management in your work environment, take some time with this command to open the door to automation and save yourself some time!