My headline is borrowed from a headline on a Malwarebytes blog post. In fact, headlines like it are all over the internet right now. This post is going to sound fairly similar to an earlier post I published, “Ex-Admin Deletes All Customer Data and Wipes Servers”. It’s from June of 2017, about a hosting provider that lost nearly all of its customers’ data because an admin with elevated privileges got mad and caused havoc.

Today’s tale of woe is a bit familiar. And just like last time, I feel absolutely terrible for the company involved. So far, the details released basically show:

- A hacker/hackers broke into the system

- They got past the multiple authentications required

- They formatted the drives of every single server, including the servers where their backups are stored

- All recent data (the past few years at least, as of the time of publishing) is gone

- There are no good backups to go back to

- There is a potential that mail older than 2016 may be salvageable from an older backup stored someplace offline.

- It’s not looking pretty.

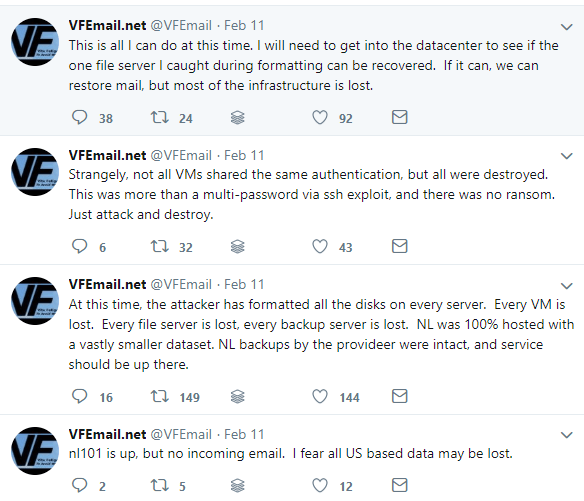

This is a recent snap of VFEmail’s twitter stream:

This blog post is going to read fairly close to the “Ex-Admin Wipes Stuff” post. You could say it’s because I’m lazy. I say it’s because the lessons are quite similar.

Database Administrators: This Is About YOU

This post isn’t about VFEmail. They have a disastrous situation, and I literally just prayed for their founder, who appears to be the sole administrator/techie on staff (and for their customers) – they’ll be in my thoughts and prayers these next few days, just like with the hosting provider’s situation. It’s a crappy place to be. They owned their really bad oops publicly. They’ve been incredibly transparent, something I’m fond of – I spoke about that in a blog post many years ago about why Bill Clinton was impeached (it’s a technical blog post).

No. This blog post is about YOU, though. And don’t think you get off the hook because you aren’t a hosting provider or an e-mail provider. And don’t think you are off the hook because you are “just” an e-mail provider, either. There are some takeaways that you both need to have right now. That we all need to have.

When someone passes too early (what is “too early”? That’s always a strange phrase), you’ll see someone say something like “hug your kids and spend time with your family.” Well, here – if you do nothing else – go check your backups NOW. Hug your backups. Check your servers. Test a restore. That’s step 1. But that didn’t help VFEmail.

I have a question for you. This is sort of my “are you alive?” question. “Just hearing this story, how do you feel?” There are three feelings you could have, probably more, but this is my post:

- At Peace and Confident – This could be good if that confidence is well placed. Read on to see if it is.

- Nervous, a bit queasy – This is fine. This is good. Before starting a SQL Server consultancy, managing a team, and building out all the services we provide, I was a DBA for many years. Many. Paranoia is a healthy attitude if your role is responsible for this stuff. That’s good. Read on to see how you can turn this into action.

- Indifferent – Well, if you are a painter and you don’t use a hosted provider for anything (not even your e-mail or pictures and backups of documents), then that’s fine. If you are a CIO, CTO, CEO, Manager, Director, DBA, Backup Admin, MSP, Host, etc. – that attitude scares the living daylights out of me. You probably shouldn’t read on, because it’s stuff that just doesn’t matter from your perspective. Just copy and paste that message I highlighted above. You may need it someday.

I actually expect most folks to be in that second camp. Even the people who know what they know, and trust that what they know is correct, probably still get a little faint when reading about these things. That’s good. That’s healthy. So this post is for you. And truth be told, it’s for me and my team and my clients, too. And it’s important. Your company’s future could be on the line. There will undoubtedly be businesses which cease operations because of this event. They will NOT recover. Their customers will not forgive them. They will be closed down. Maybe sued. Maybe bankrupt. (Now, it’s possible that no one will shut down because of loss of e-mail – and local mail clients cache a lot, so it’s a bit less risky than the hosting provider’s case – but it’s my blog post. I can leave the hyperbole in!)

This is not stuff to play around with. So my main point here is two-fold – 1.) To scare you into caring and worrying. 2.) To give you some proactive steps to direct this fear towards so it stays healthy and productive. Please don’t be like Johnny as played by Stephen Stucker in Airplane!….

Enough Preamble.

Here’s a list of some things you can do. This isn’t exhaustive. But start thinking about more what-ifs and what-abouts and you’ll add to it. (The list here is the big change thanks to this latest disaster. And thanks to Steve Jones for putting my mind here in a recent Voice of the DBA post.)

What Mindsets Should Change

- Realize you are your own advocate – VFEmail was providing mail services. Who provides your services? Do you let all the eggs stay in their basket?

- Realize your backups MAY NOT BE SAFE – This is actually a big one. A lot of folks may have their backups on separate types of storage or in separate locations inside their hosting environment or data center. If it is ONLINE and accessible to the world? It is not safe. Sadly, this should now be 100% clear. It’s no truer today than it was before, but now there is a clear-cut example of the point. Even if you have your data geo-replicated into your cloud provider – if someone can get at it remotely with credentials that can eventually be obtained, your backups that are online are NOT SAFE.

- Realize the level of security and paranoia you employ today may not be enough – “Ooh, we use ______ for our password safe and TFA” – this is a step in the right direction and, sadly, better than what 50% (or more) of shops do, but you can’t rest your security posture there.

- Think about the worst-case – I’ve mentioned “tabletop exercises” a lot lately. It’s a thing planners and organizers of various activities do. In the fire and EMS service, we will sometimes chit-chat about scenarios. What could go wrong? What could go really wrong? DBAs are good at being paranoid control freaks when it comes to our own developers. But let’s not take certain protections for granted. It’s time to tighten our thinking and our practices.

What Should We Start Doing?

- Ask the tough questions. And don’t just ask one level of questions. Follow the stack and ask the whys, hows, and whats of your whys, hows, and whats. “Why are we safe?” “Because we rotate our passwords every 4 hours and store them in a vault.” “Why is the vault secure?” etc. – keep asking the questions and get to the roots. Get to the discrete pieces, parts, and people and start improving.

- Think about Offline backups – Where is the last copy of one of your backups that is totally inaccessible from the internet? How much data would you lose if that is all you had? There are layers to this, too. These aren’t foolproof on their own, but here are some ideas to at least get you started:

- PULL Backups – Instead of writing them to a separate online backup location, have that location PULL. Yes, it is still online. Yes, it is still a risk – but this way the other accounts and services have no permissions on the backup.

- Multiple Online Backups – Again this does NOT protect you from a prolonged and sustained attack with someone taking the time to sort out access, etc – but having multiple separate repositories can give you more protection and more time to notice and do something about an attack. It can also give you “multi-cloud” protection. I have clients who store backups in S3 and Azure Blob. Paranoid? Maybe, but they have access in the event of a serious service interruption. But yes – they are each online, so we need to come up with ways to better isolate and firewall off those other backups.

- Old fashioned off-site backups – You thought the days of tape rotations and shipping backups were over? Maybe not. Is it that unreasonable to find a way to take at least a monthly or weekly archival copy of a backup and store it to some offline location? Or asked another way, how expensive is it to lose a few years of data?

- Security – It’s time to be paranoid about phishing, about AV, about safe browsing, about isolating networks. And this doesn’t just exist on the computer – it exists on the phone also. The mini computers we carry around everywhere – the ones that handle your multifactor authentication. Also in the realm of security is protection against social engineering. We need to train people to be distrustful. I LOVED it when I signed my team up for a service and the service e-mail went out before my e-mail. I got a few notes back: “What is this? Should I click?” This awareness needs to be everywhere.
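To make the “how much data would you lose if that is all you had?” question from the offline backups bullet a bit more concrete, here is a minimal Python sketch. Everything in it is hypothetical and for illustration only: the `BackupCopy` record, the `exposure_if_online_wiped` helper, and the sample dates are my own assumptions, not anything VFEmail or any backup tool actually uses. It simply treats every online copy as gone and reports how old your newest offline copy is.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Optional


@dataclass(frozen=True)
class BackupCopy:
    """One copy of a backup (hypothetical inventory record)."""
    label: str
    taken_at: datetime  # when the data in this copy was captured
    offline: bool       # True only if no network path can reach it


def exposure_if_online_wiped(
    copies: Iterable[BackupCopy], now: datetime
) -> Optional[timedelta]:
    """Return the window of data lost if every online copy is destroyed.

    Returns None when there is no offline copy at all, which means
    everything would be lost.
    """
    offline_times = [c.taken_at for c in copies if c.offline]
    if not offline_times:
        return None
    # Only the newest offline copy matters for the worst case.
    return now - max(offline_times)


if __name__ == "__main__":
    # Made-up inventory: two online copies and one monthly offline tape.
    now = datetime(2023, 3, 15)
    inventory = [
        BackupCopy("nightly to backup server", datetime(2023, 3, 14), offline=False),
        BackupCopy("replica in second cloud", datetime(2023, 3, 14), offline=False),
        BackupCopy("monthly tape in the safe", datetime(2023, 3, 1), offline=True),
    ]
    lost = exposure_if_online_wiped(inventory, now)
    if lost is None:
        print("No offline copy: a full wipe loses everything.")
    else:
        print(f"Worst case: {lost.days} days of data lost.")
```

The point of keeping it this dumb is that the answer should be a number you can say out loud in a meeting. If the function returns `None` for your real inventory, that is the conversation to have first.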

There are many more steps we can and should take. But a little bit of a pause coupled with a pinch of paranoia would do us all well right now. I’m thinking of what this attack means for the environments we support. You should be also.

It sounds like the PAM / credential store got broken into as well. This means every IT asset that was online was fair game.

And this exposed the weakness of tape replacement devices, even ones that have replication across data centers.

Got to have the off-line backups.